Most Customs administrations devote a significant portion of their resources to training and competency development activities. The development and delivery of training also feature among the core activities of the WCO.[1] But how can these training activities be assessed to find out whether they are producing the expected results and impact? This very issue was raised at the 2021 session of the Capacity Building Committee, where the WCO Secretariat was requested to develop a comprehensive and consistent evaluation model that could be applied to WCO training activities. This article presents the method and tools proposed by the Secretariat, as well as their resource implications.

A process which begins before the training delivery starts and continues thereafter

To evaluate the impact of a training activity, the WCO Secretariat currently assesses only the satisfaction level of the participants, with a few exceptions. This may suffice for training activities delivered as a “one-off intervention”, but it is wholly insufficient in the context of tailor-made and long-term capacity building programmes. In such programmes, trainers, programme managers and, in some cases, donors need to understand which parts of a training programme are effective and which are ineffective or irrelevant, and to be able to determine where improvements could be made.

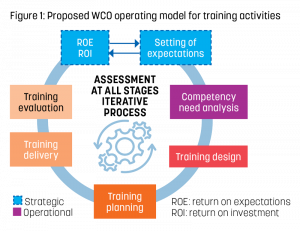

Figure 1 illustrates a possible WCO operating model for such training activities. It provides for an iterative process and evaluation at all stages, and is results- and impact-based. The initial step that would guide the whole process involves setting and agreeing on the beneficiary’s expectations.

Through this approach to training, the evaluation supports and drives the training design, planning and delivery phases. It helps in calibrating both the administration’s expectations and the trainer’s objectives and service delivery. On that basis, the evaluation of training should be a process that begins before the training delivery starts and continues thereafter. Otherwise, there would be very little to evaluate.

For evaluation to be effective, it should focus on specific aspects of performance change that can be directly attributed to the training effort. Outcomes and indicators must be defined before the course is conceived and developed. In other words, it is necessary to specify what knowledge, skills and behaviours the participants are intended to acquire, as well as what they are expected to do differently after the training is completed. The evaluation process is based on a clear description of those elements and involves collecting and analysing data at different stages to determine whether or not the aims of the training have been achieved and to decide on future activities. Reporting tools and templates are to be used for collecting this data.

WCO Training and Development Evaluation Framework

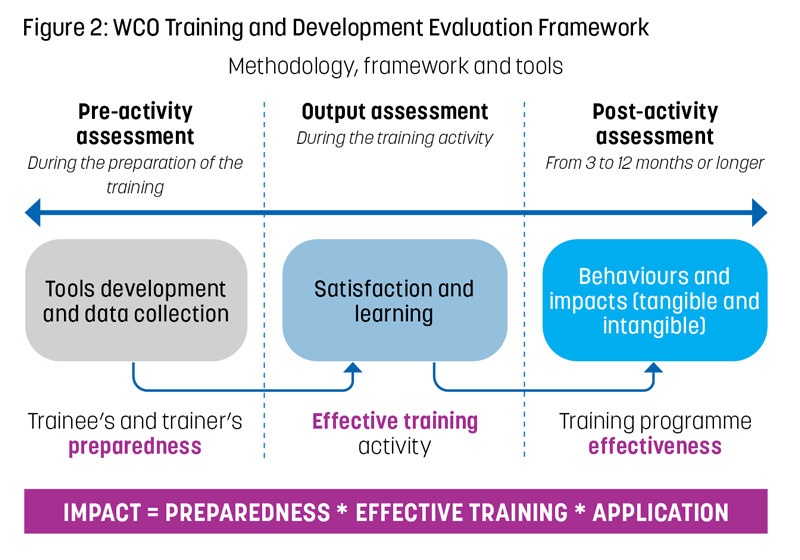

With all these considerations in mind, the WCO Secretariat has developed a Training and Development Evaluation Framework (TDEF) intended to provide a comprehensive and standardized approach to evaluating WCO training activities. As illustrated in Figure 2, the framework comprises three key phases of evaluation, each requiring the involvement of a number of stakeholders.

All phases work together, with each one complementing the others. The first phase relates to the “pre-activity assessment” and will help to assess the trainee’s and trainer’s level of preparedness. During this phase, activities must be conducted in parallel with the setting of stakeholder expectations, a needs analysis and the training design.

The second phase – called the “output assessment” – takes place during the training delivery stage and focuses on the evaluation of both trainee and trainer responses and the learning outcomes of the participants.

The third phase, referred to as the “post-activity assessment”, serves to measure training effectiveness per se. In practical terms, the tools used during this phase evaluate the participant’s level of application of the learning in the workplace and the extent to which this has impacted his or her job performance and, by a knock-on effect, organizational performance.

Implementing such a framework requires resources to be allocated to the WCO Secretariat. This will involve establishing a Monitoring and Evaluation Unit with dedicated staff, whose responsibilities will include developing a WCO training strategy aligned with the WCO Strategic Plan, and putting in place digital tools to collect data and enable a smooth and efficient reporting process. It is also necessary to establish a Training and Development Advisory Board to oversee the training system.

There is, moreover, a need to review and strengthen the WCO’s expert accreditation policy through the implementation of a competency evaluation process. All trainers, whether they are national experts or Secretariat staff, should also regularly attend retraining and upskilling programmes to ensure the continuous development of their skills.

Finally, alongside the Monitoring and Evaluation Unit, all parties involved in the training will be required to take ownership of the proposed approach. From Secretariat staff, accredited experts and donor representatives to training participants and their supervisors, they will all have an important role to play in its implementation.

All the tools to evaluate WCO training and development activities will be compiled into a Guide, which will be presented and discussed at the next session of the Capacity Building Committee in early 2023.

More information

Capacity.building@wcoomd.org

[1] According to the WCO corporate plan, over 85% of the total number of activities conducted each year involve training and development.